5 Ways to Remove Duplicates

Introduction to Removing Duplicates

Removing duplicates from a dataset, list, or any collection of items is a common task across various fields, including data analysis, programming, and database management. Duplicates can lead to inaccurate analysis, inefficient use of resources, and cluttered datasets. In this article, we will explore five ways to remove duplicates, considering different scenarios and tools.

Understanding Duplicates

Before diving into the methods of removing duplicates, it’s essential to understand what constitutes a duplicate. In the strictest sense, a duplicate is an exact copy of an item already present in a dataset or list, but the criteria for determining duplicates vary with context. For instance, in a list of names, duplicates might be judged on the full name, while in a dataset of products, duplicates could be identified by product codes or descriptions.
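To make the idea of a context-dependent criterion concrete, here is a minimal Python sketch. The helper `dedupe_by` and the sample names are hypothetical, introduced only for illustration; the point is that the same list gives different results depending on the key that defines a duplicate.

```python
from typing import Callable, Hashable, Iterable, TypeVar

T = TypeVar("T")


def dedupe_by(items: Iterable[T], key: Callable[[T], Hashable]) -> list[T]:
    """Keep the first item seen for each key; later items with the same key are dropped."""
    seen = set()
    unique = []
    for item in items:
        k = key(item)
        if k not in seen:
            seen.add(k)
            unique.append(item)
    return unique


names = ["Ana Lima", "ana lima", "Ana  Lima", "Bo Chen"]

# Exact match: only identical strings count as duplicates.
exact = dedupe_by(names, key=lambda s: s)

# Looser criterion (an assumption for this example): ignore case and extra spaces.
normalized = dedupe_by(names, key=lambda s: " ".join(s.lower().split()))

print(exact)       # all four names survive
print(normalized)  # ['Ana Lima', 'Bo Chen']
```

Which key is appropriate depends on the data: a product list might use the product code alone, while a contact list might combine name and email.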

Method 1: Manual Removal

Manual removal means reviewing a list or dataset by eye and deleting any duplicate entries. This method is feasible for small datasets but becomes impractical and time-consuming for larger ones. It requires careful attention to detail to ensure that all duplicates are identified and removed correctly.

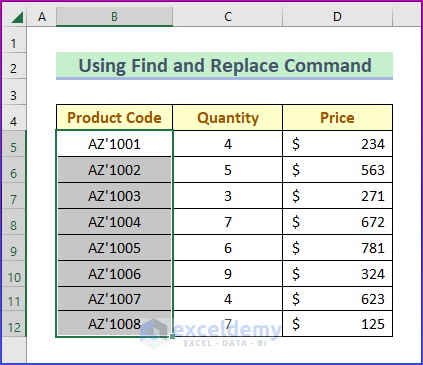

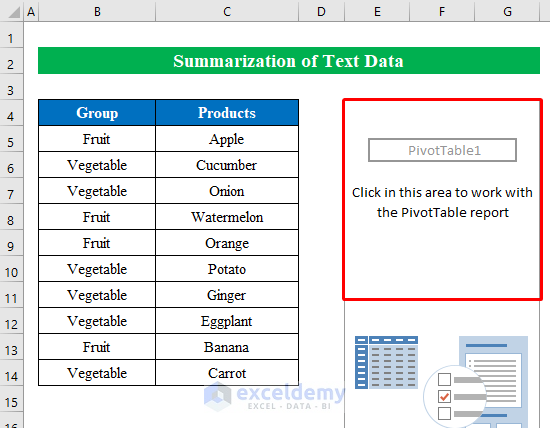

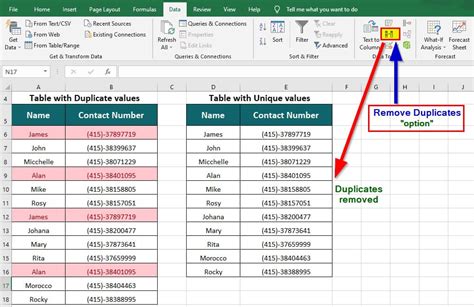

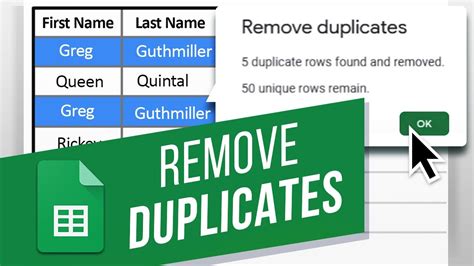

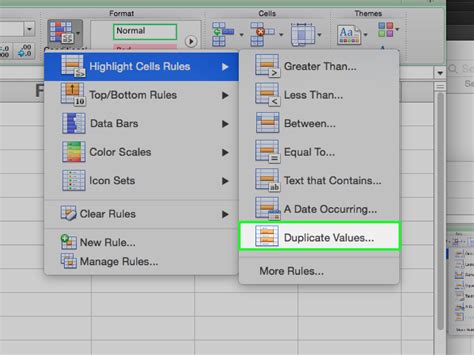

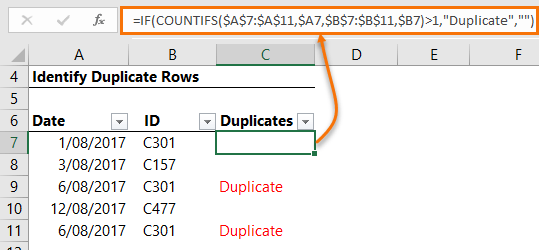

Method 2: Using Spreadsheet Functions

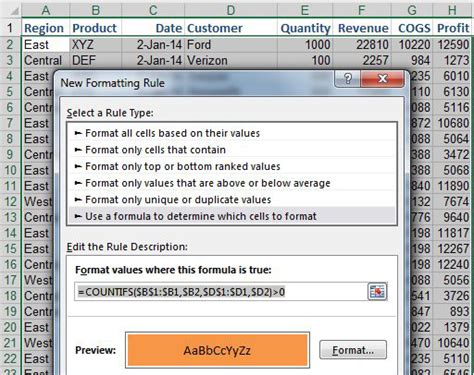

For datasets managed in spreadsheets like Microsoft Excel or Google Sheets, there are built-in tools to remove duplicates. These tools automatically identify and remove duplicate rows based on one or more columns that you choose. The process typically involves selecting the range of data, opening the “Data” tab or menu, and choosing the “Remove Duplicates” option (in Google Sheets it sits under “Data cleanup”). This method is efficient for managing small to medium-sized datasets.
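For readers who export a sheet to CSV, the behavior of such a tool can be sketched in Python. This illustrates the idea only, not how Excel or Google Sheets implement it: the function name `remove_duplicate_rows`, the column names, and the sample data are all assumptions, and the sketch keeps the first row for each combination of the chosen columns.

```python
import csv
import io


def remove_duplicate_rows(csv_text: str, columns: list[str]) -> list[dict[str, str]]:
    """Return rows from CSV text, keeping the first row per combination of `columns`."""
    seen = set()
    kept = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        signature = tuple(row[c] for c in columns)
        if signature not in seen:
            seen.add(signature)
            kept.append(row)
    return kept


# Hypothetical export of a small product sheet.
sheet = """code,description,price
A1,Widget,9.99
A2,Gadget,4.50
A1,Widget (blue),9.99
A2,Gadget,4.50
"""

by_all_columns = remove_duplicate_rows(sheet, ["code", "description", "price"])
by_code_only = remove_duplicate_rows(sheet, ["code"])

print(len(by_all_columns))  # 3: only the repeated A2 row is dropped
print(len(by_code_only))    # 2: one row per product code
```

Choosing fewer columns makes the check stricter about what counts as unique, which mirrors ticking or unticking columns in the spreadsheet dialog.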

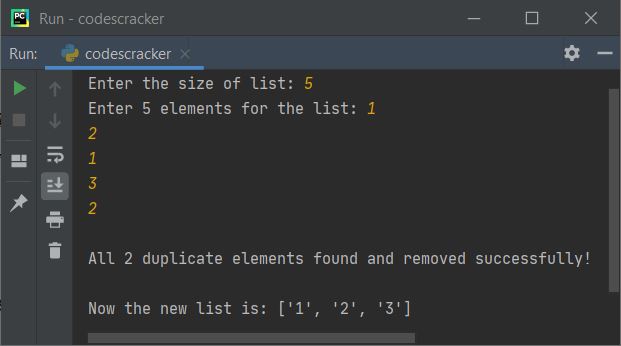

Method 3: Programming Languages

In programming, removing duplicates can be achieved through various algorithms and data structures, depending on the language and the nature of the data. For example, in Python, you can convert a list to a set because sets only allow unique elements; note that a set does not preserve the original order and requires its elements to be hashable. Similarly, in languages like Java or C++, you can use hash sets or implement custom algorithms for duplicate removal.
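A short sketch of the Python approach mentioned above, using illustrative sample data. It shows two standard-library idioms: `set`, which drops duplicates but does not guarantee the original order, and `dict.fromkeys`, which keeps the first occurrence of each element in order. Both require the elements to be hashable.

```python
items = ["pear", "apple", "pear", "fig", "apple"]

# A set keeps one copy of each element, but its iteration order is not guaranteed.
unique_unordered = set(items)

# dict keys are unique and preserve insertion order (Python 3.7+),
# so this keeps the first occurrence of each element in its original position.
unique_ordered = list(dict.fromkeys(items))

print(sorted(unique_unordered))  # ['apple', 'fig', 'pear']
print(unique_ordered)            # ['pear', 'apple', 'fig']
```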

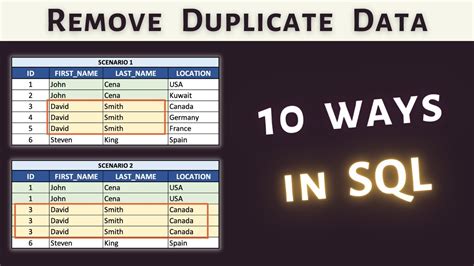

Method 4: Database Queries

In database management systems (DBMS), duplicates can be handled with SQL queries. The DISTINCT keyword returns only unique (distinct) records in a query result; it does not change the table itself. To delete duplicate rows from a table, you typically combine other operations, for example copying the distinct rows into a new table or deleting all but one row in each group of duplicates, with the exact syntax depending on the database system you are using. This method is particularly useful for managing large datasets stored in databases.
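The difference between selecting distinct rows and actually deleting duplicates can be sketched with Python's built-in `sqlite3` module. The table `customers`, its columns, and the sample rows are hypothetical, and the `DELETE` statement relies on SQLite's implicit `rowid`; other database systems typically need a different formulation, such as a window function or a primary key comparison.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (name TEXT, email TEXT)")
conn.executemany(
    "INSERT INTO customers (name, email) VALUES (?, ?)",
    [
        ("Ana", "ana@example.com"),
        ("Bo", "bo@example.com"),
        ("Ana", "ana@example.com"),
    ],
)

# SELECT DISTINCT hides duplicates in the result; the table itself is unchanged.
distinct_rows = conn.execute(
    "SELECT DISTINCT name, email FROM customers ORDER BY name"
).fetchall()
rows_before = conn.execute("SELECT COUNT(*) FROM customers").fetchone()[0]

# Delete every row except the earliest one (lowest rowid) in each duplicate group.
conn.execute(
    """
    DELETE FROM customers
    WHERE rowid NOT IN (
        SELECT MIN(rowid) FROM customers GROUP BY name, email
    )
    """
)
conn.commit()
rows_after = conn.execute("SELECT COUNT(*) FROM customers").fetchone()[0]

print(distinct_rows)            # [('Ana', 'ana@example.com'), ('Bo', 'bo@example.com')]
print(rows_before, rows_after)  # 3 2
conn.close()
```

Running a destructive statement like this against a copy of the table first is a sensible precaution.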

Method 5: Dedicated Data Cleaning Tools

There are also dedicated data cleaning tools and software that offer advanced features for removing duplicates, among other data cleansing tasks. These tools can handle large datasets efficiently and often provide more sophisticated criteria for identifying duplicates, such as fuzzy matching for text data. They are particularly useful for data analysts and scientists who regularly work with large and complex datasets.
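Fuzzy matching can be illustrated with Python's standard-library `difflib`. This is only a sketch of the idea, not how any particular data cleaning tool works: the function `fuzzy_dedupe`, the 0.85 similarity threshold, and the sample company names are assumptions chosen for the example, and the pairwise comparison is quadratic, so it suits small lists only.

```python
from difflib import SequenceMatcher


def fuzzy_dedupe(values: list[str], threshold: float = 0.85) -> list[str]:
    """Keep a value only if it is not too similar to any value already kept."""
    kept: list[str] = []
    for value in values:
        candidate = value.lower().strip()
        is_duplicate = any(
            SequenceMatcher(None, candidate, k.lower().strip()).ratio() >= threshold
            for k in kept
        )
        if not is_duplicate:
            kept.append(value)
    return kept


companies = ["Acme Corporation", "Acme Corporaton", "ACME corporation ", "Globex Inc"]
print(fuzzy_dedupe(companies))  # ['Acme Corporation', 'Globex Inc']
```

The threshold controls the trade-off: a lower value merges more near-matches but risks collapsing entries that are genuinely different.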

📝 Note: When removing duplicates, it's crucial to back up your original dataset to prevent loss of data in case something goes wrong during the removal process.

Comparison of Methods

Each method has its advantages and disadvantages. Manual removal is simple but time-consuming and prone to human error. Spreadsheet functions are convenient for small datasets but may not be efficient for very large ones. Programming approaches offer flexibility and power but require programming knowledge. Database queries are ideal for data already stored in databases but might require learning SQL. Dedicated data cleaning tools offer efficiency and advanced features but can be costly.

| Method | Advantages | Disadvantages |
|---|---|---|
| Manual Removal | Simple, no special skills required | Time-consuming, prone to errors |
| Spreadsheet Functions | Easy to use, built-in tools | Limited to spreadsheet size and complexity |
| Programming Languages | Flexible, powerful, scalable | Requires programming knowledge |
| Database Queries | Efficient for large datasets, precise | Requires SQL knowledge, database setup |
| Dedicated Data Cleaning Tools | Advanced features, efficient, scalable | Can be costly, requires learning the tool |

In summary, the choice of method for removing duplicates depends on the size and complexity of the dataset, the tools and skills available, and the specific requirements of the task at hand. Whether you’re working with small lists or large datasets, understanding the different approaches to removing duplicates is essential for efficient and accurate data management.

What is the most efficient way to remove duplicates from a large dataset?

The most efficient way often involves using dedicated data cleaning tools or programming languages, which can handle large datasets and offer scalable solutions.

How do I remove duplicates in Excel?

In Excel, you can remove duplicates by selecting the range of data, going to the “Data” menu, and choosing the “Remove Duplicates” option.

Can I use SQL to remove duplicates from a database table?

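Yes, SQL can be used to remove duplicates from a database table. The DISTINCT keyword selects only unique records without changing the table; to delete the duplicate rows themselves, it is combined with other operations, such as copying the distinct rows into a new table or deleting all but one row in each group of duplicates.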