5 Ways to Consolidate Duplicates

Introduction to Consolidating Duplicates

When dealing with large datasets or lists, one common issue that arises is the presence of duplicate entries. These duplicates can lead to inaccuracies in analysis, waste storage space, and complicate data management. Consolidating duplicates is a crucial step in data cleaning and preprocessing, ensuring that each piece of information is unique and contributes meaningfully to the dataset. This process can be applied across various domains, from customer databases in marketing to genomic sequences in bioinformatics. In this article, we will explore five ways to consolidate duplicates, highlighting their importance, methods, and applications.

Understanding the Importance of Consolidating Duplicates

Before diving into the methods of consolidating duplicates, it’s worth understanding why this process matters. Duplicates can arise from various sources, including human error during data entry, automatic generation of data, or the merging of datasets. The presence of duplicates can:

- Skew Analysis Results: Duplicates can lead to overrepresentation of certain data points, affecting statistical analysis and decision-making.
- Waste Resources: Storing and processing duplicate data can be costly, especially in big data scenarios.
- Complicate Data Retrieval: Finding specific information becomes harder when the same record appears several times.

5 Ways to Consolidate Duplicates

Consolidating duplicates involves identifying and either removing or merging duplicate entries to ensure data integrity. Here are five strategies to achieve this:

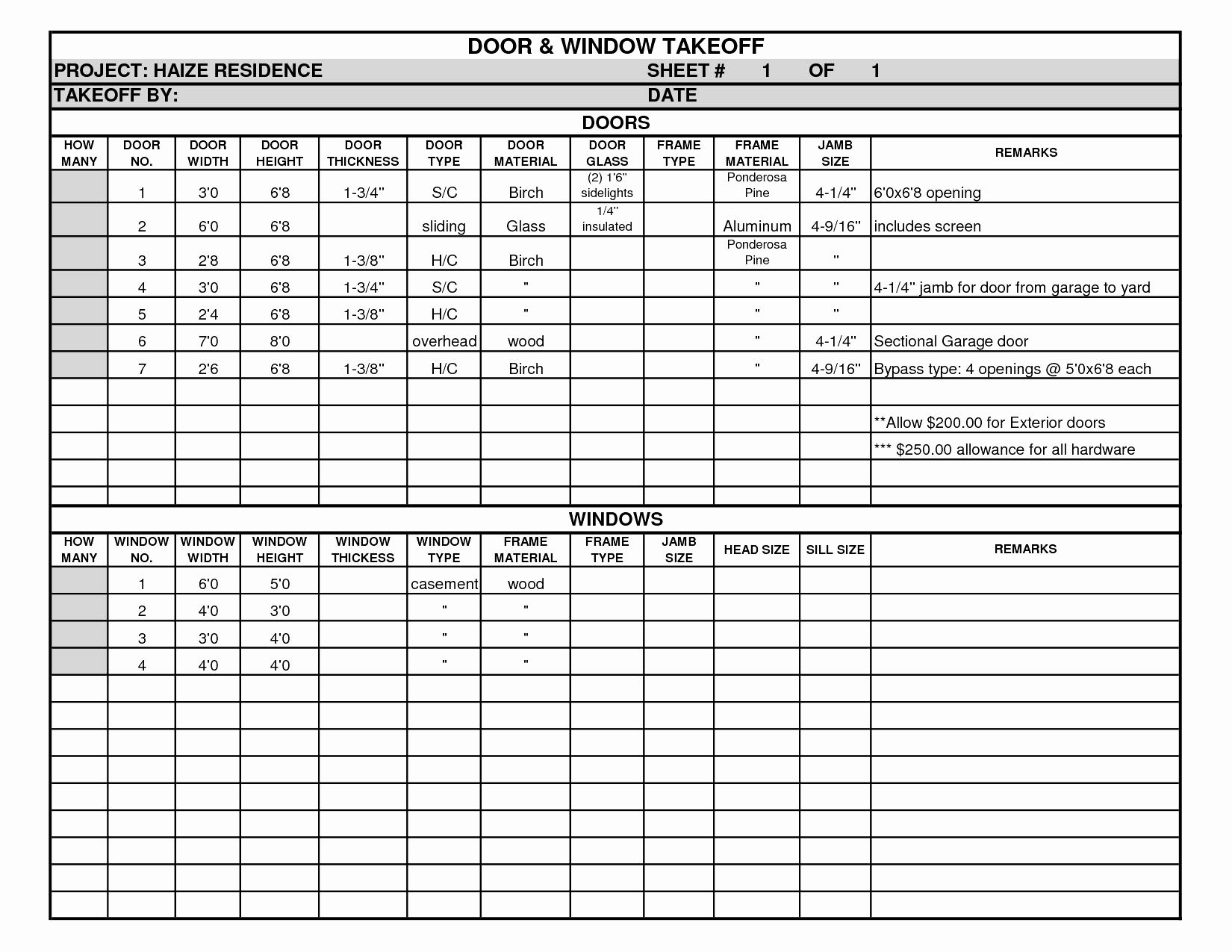

- Manual Removal: This involves manually going through the dataset to identify and remove duplicates. While time-consuming and prone to human error, it’s straightforward and can be effective for small datasets.

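- Using Unique Identifiers: Giving each real-world entity a unique identifier (for example, a customer ID or a normalized email address) and treating it as a key makes duplicates easy to detect automatically, because any records that share the identifier describe the same entity. This method is particularly useful in databases where each record should represent a unique entity, and a uniqueness constraint on the key can also stop new duplicates from being inserted.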

- Data Profiling: This method involves examining the data’s patterns, such as value frequencies, formats, and inconsistent spellings, to identify potential duplicates. It’s more complex and requires some data analysis expertise, but it can be very effective, especially when combined with other methods.

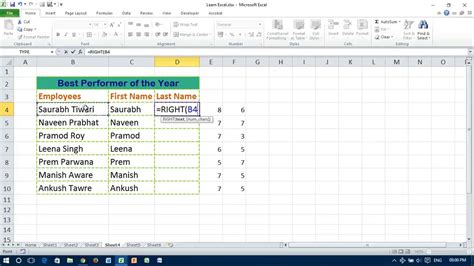

- Algorithmic Approaches: Various algorithms can be employed to detect duplicates based on similarity measures. For example, in text data, the Levenshtein distance counts the minimum number of single-character insertions, deletions, or substitutions needed to turn one string into another, so a small distance flags likely duplicates or near-duplicates such as typos.

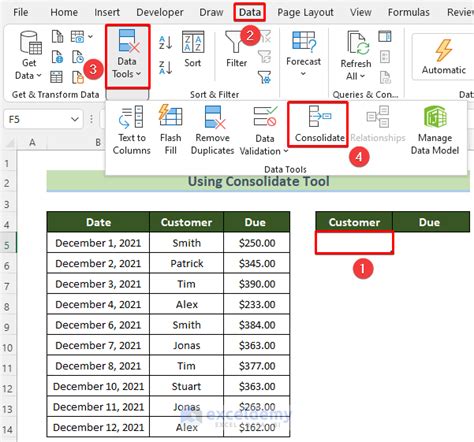

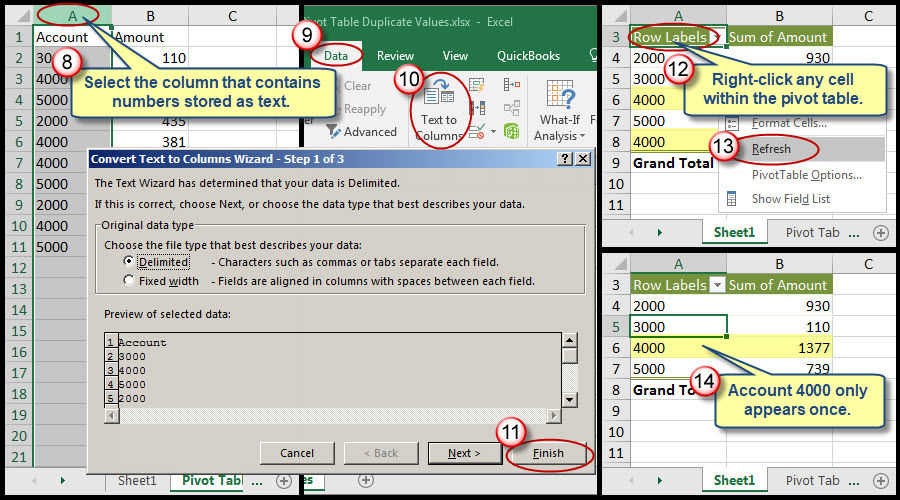

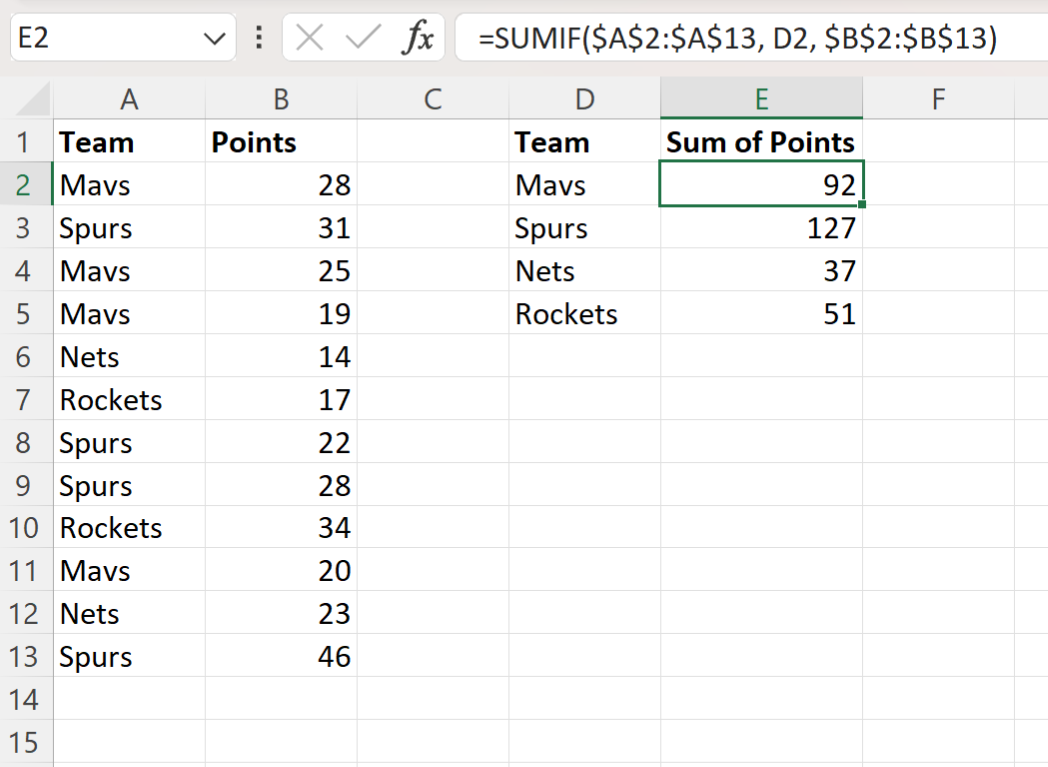

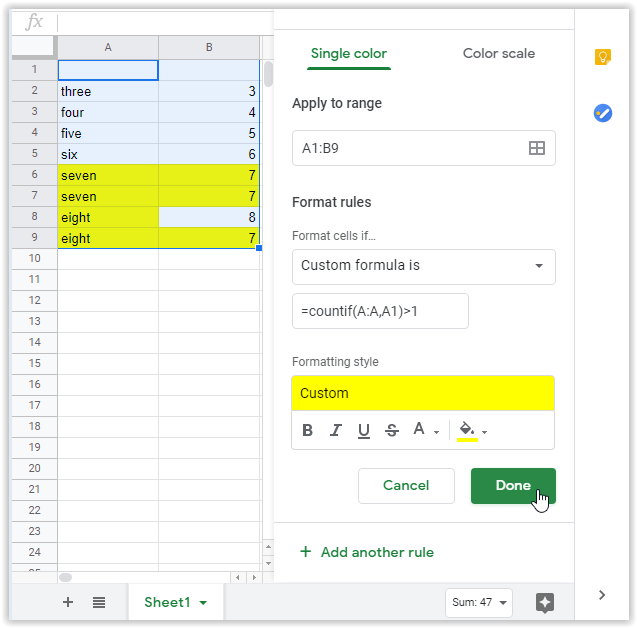

- Automated Tools and Software: Utilizing specialized software and tools designed for data cleaning can significantly streamline the process of consolidating duplicates. These tools often come with built-in algorithms for duplicate detection and can handle large datasets efficiently.
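The Levenshtein approach from the Algorithmic Approaches item can be sketched in a few lines of Python. This is a minimal illustration rather than a production matcher: the helper names `levenshtein` and `near_duplicates`, the normalization step (lowercasing and collapsing whitespace), the sample names, and the `max_distance=2` threshold are all assumptions chosen for the example.

```python
from itertools import combinations


def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions, or
    substitutions needed to turn string a into string b."""
    # Dynamic programming, keeping only the previous row of the table.
    previous = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        current = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            current.append(min(
                previous[j] + 1,         # deletion
                current[j - 1] + 1,      # insertion
                previous[j - 1] + cost,  # substitution (or match)
            ))
        previous = current
    return previous[-1]


def near_duplicates(values, max_distance=2):
    """Return pairs of entries whose normalized forms are within
    max_distance edits of each other (threshold is an assumption)."""
    # Normalize: lowercase and collapse runs of whitespace.
    normalized = [" ".join(v.lower().split()) for v in values]
    pairs = []
    # Compare every pair of entries once.
    for (i, a), (j, b) in combinations(enumerate(normalized), 2):
        if levenshtein(a, b) <= max_distance:
            pairs.append((values[i], values[j]))
    return pairs


names = ["Jon Smith", "John Smith", "john  smith", "Jane Doe"]
print(near_duplicates(names))
```

Comparing every pair is quadratic in the number of entries, so larger datasets typically compare only records that already share some cheap attribute (often called blocking) or hand the job to a dedicated tool. A fixed edit-distance threshold also behaves differently on short and long strings, so it is worth tuning on a sample of real data.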

Applications of Consolidating Duplicates

The applications of consolidating duplicates are vast and varied, impacting numerous fields:

- Marketing and Sales: Removing duplicate customer entries can improve the accuracy of marketing campaigns and customer relationship management.

- Bioinformatics: In genomic studies, consolidating duplicate sequences can help in identifying unique genetic markers.

- Financial Analysis: Duplicate transactions can skew financial analysis; removing them ensures more accurate financial reporting and forecasting.

Best Practices for Consolidating Duplicates

When consolidating duplicates, several best practices should be kept in mind:

- Backup Data: Before making any changes, ensure that the original dataset is backed up to prevent loss of information.
- Test Algorithms: Especially when using automated tools or algorithms, test them on a small subset of the data to ensure they are working as expected.
- Document Changes: Keep a record of changes made to the dataset, including the removal or merging of duplicates, for transparency and accountability.
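These practices, together with the Unique Identifiers method, can be combined in a small consolidation routine. The sketch below is hypothetical: the function name `consolidate`, the use of email as the identifying key, the record fields, and the merge rule (keep the first record and fill its empty fields from later duplicates) are assumptions for illustration. It never modifies its input, so the original list remains available as a backup, and it returns a log of every merge so the changes are documented.

```python
def consolidate(records, key_fields):
    """Merge records that share the same normalized key.

    Hypothetical helper: keeps the first record seen for each key and
    fills its empty fields from later duplicates. The input list is not
    modified, and every merge is recorded in the returned log.
    """
    kept = {}         # normalized key -> merged copy of the first record
    first_index = {}  # normalized key -> row number of that first record
    log = []
    for index, record in enumerate(records):
        # Normalize key values so " ANA@example.com" matches "ana@example.com".
        key = tuple(str(record.get(f, "")).strip().lower() for f in key_fields)
        if key not in kept:
            kept[key] = dict(record)  # shallow copy leaves the input untouched
            first_index[key] = index
            continue
        merged = kept[key]
        for name, value in record.items():
            # Only fill gaps; never overwrite a value the kept record already has.
            if value not in (None, "") and merged.get(name) in (None, ""):
                merged[name] = value
        log.append({"kept_row": first_index[key], "merged_row": index, "key": key})
    return list(kept.values()), log


customers = [
    {"email": "ana@example.com", "name": "Ana Lima", "phone": ""},
    {"email": "bo@example.com", "name": "Bo Chen", "phone": "555-0101"},
    {"email": " ANA@example.com", "name": "Ana Lima", "phone": "555-0199"},
]

clean, log = consolidate(customers, ["email"])
for row in clean:
    print(row)
print(log)
```

Running a routine like this on a small sample first, as the Test Algorithms practice suggests, is a cheap way to check that the chosen key and merge rule behave as intended before applying them to the full dataset.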

📝 Note: The approach to consolidating duplicates can significantly affect the outcomes of data analysis. Therefore, it's crucial to carefully evaluate the methods used based on the specific characteristics of the dataset and the goals of the analysis.

In summary, consolidating duplicates is a critical step in data preprocessing that can significantly impact the accuracy and efficiency of data analysis and management. By understanding the importance of this process and applying the appropriate methods, individuals and organizations can ensure their datasets are clean, reliable, and contribute meaningfully to informed decision-making.

What are the common causes of duplicates in datasets?

Duplicates in datasets can arise from human error during data entry, automatic generation of data, or the merging of datasets without proper reconciliation.

How does consolidating duplicates improve data analysis?

Consolidating duplicates ensures that each data point is unique, preventing the skewing of analysis results, reducing storage needs, and simplifying data retrieval, thereby improving the overall accuracy and efficiency of data analysis.

What tools are available for automatically consolidating duplicates?

+

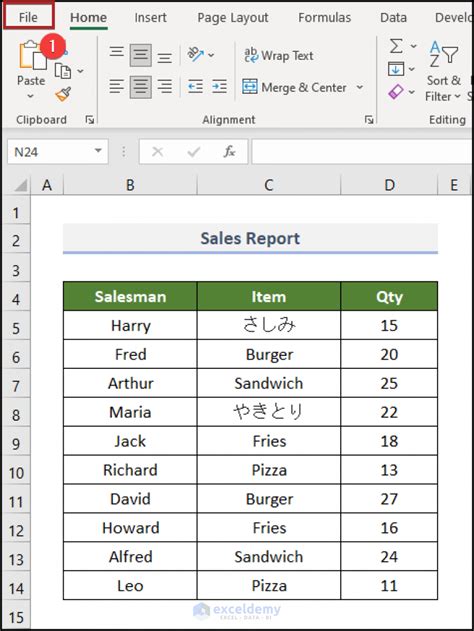

Various software and tools are designed for data cleaning and preprocessing, including spreadsheet programs like Microsoft Excel, dedicated data cleaning tools, and programming libraries in languages like Python and R.
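For the simplest case, a plain Python list, the built-ins are often enough before reaching for a library. The snippet below uses `collections.Counter` to profile which values repeat and `dict.fromkeys` to drop exact duplicates while keeping first-seen order; the sample list is made up for illustration. It only catches exact matches, so near-duplicates still need a similarity measure. For tabular data, pandas offers `DataFrame.drop_duplicates` for the same job.

```python
from collections import Counter

entries = ["apple", "banana", "apple", "cherry", "banana", "apple"]

# Profile: how often does each value occur?
counts = Counter(entries)
repeated = {value: n for value, n in counts.items() if n > 1}

# Remove exact duplicates while keeping first-seen order
# (dicts preserve insertion order in Python 3.7+).
unique = list(dict.fromkeys(entries))

print(repeated)  # {'apple': 3, 'banana': 2}
print(unique)    # ['apple', 'banana', 'cherry']
```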