5 Ways to Scrape Data

Introduction to Data Scraping

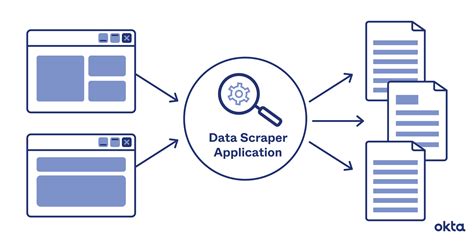

Data scraping, also known as web scraping, is the process of automatically extracting data from websites, web pages, and online documents. It has become a crucial tool for businesses, researchers, and individuals who need to collect and analyze large amounts of data from the internet. In this article, we will explore five ways to scrape data, including the tools and techniques used in each method.

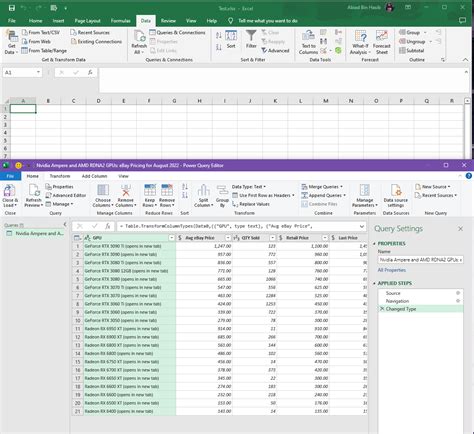

Method 1: Using Web Scraping Software

One of the most common methods of data scraping is using web scraping software. These tools are designed to navigate websites, extract data, and store it in a structured format. Some popular web scraping software includes:

* Scrapy: An open-source Python framework for building web scrapers.
* Beautiful Soup: A Python library used for parsing HTML and XML documents.
* Selenium: A browser automation tool that can be used for web scraping, especially for websites that render content with JavaScript.

These tools can extract text, images, and videos from websites. They can also handle common web scraping tasks, such as submitting forms, managing cookies, and coping with anti-scraping measures.
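To make the parsing step concrete, here is a minimal sketch that pulls links out of an HTML fragment. It deliberately uses Python's built-in `html.parser` module rather than any of the libraries above, so it runs without extra installs. The `LinkExtractor` class, the `extract_links` helper, and the sample markup are all made up for illustration.

```python
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collect (href, text) pairs for every <a> element that has an href."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None   # href of the <a> currently being read, if any
        self._text = []     # text fragments seen inside that <a>

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        # Only keep text that appears inside a link with an href.
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


# Hypothetical page fragment standing in for a downloaded document.
SAMPLE_HTML = """
<ul>
  <li><a href="/products/1">Blue <b>Widget</b></a></li>
  <li><a href="/products/2">Red Widget</a></li>
  <li><a>No link here</a></li>
</ul>
"""


def extract_links(html):
    parser = LinkExtractor()
    parser.feed(html)
    parser.close()
    return parser.links


if __name__ == "__main__":
    for href, text in extract_links(SAMPLE_HTML):
        print(href, text)
```

A dedicated library such as Beautiful Soup typically expresses the same idea in fewer lines and copes better with malformed markup. The structure is the same either way: fetch a document, walk its elements, and keep the fields you need.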

Method 2: Using APIs

Another way to collect data is through Application Programming Interfaces (APIs). APIs are designed to provide access to data in a structured format, making the data easier to extract and use. Many websites provide APIs for accessing their data, including:

* Twitter API (now the X API): For accessing tweets and user information.
* Facebook API: For accessing Facebook data, such as user information and page insights.
* Google APIs: For accessing Google data, such as search results and maps data.

Using APIs can be a more efficient and reliable way to scrape data, as it eliminates the need to navigate websites and parse HTML documents. However, APIs often have rate limits and usage restrictions, which can limit the amount of data that can be extracted.
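The rate limits mentioned above usually shape how a client is written. The sketch below shows one common pattern: follow a pagination cursor and back off when the server asks you to slow down. Everything named here is an assumption for illustration: `fetch_page` stands in for whatever call a real API client provides, `RateLimited` for its "too many requests" error, and the fake API exists only so the example runs without a network.

```python
import time


class RateLimited(Exception):
    """Hypothetical error a client raises when the API reports a rate limit."""

    def __init__(self, retry_after=1.0):
        super().__init__("rate limited")
        self.retry_after = retry_after


def fetch_all(fetch_page, max_retries=3, sleep=time.sleep):
    """Collect items from every page, pausing when the API is rate limited.

    fetch_page(cursor) must return (items, next_cursor), where next_cursor
    is None on the last page. The first call receives cursor=None.
    """
    items, cursor = [], None
    while True:
        for attempt in range(max_retries + 1):
            try:
                page, next_cursor = fetch_page(cursor)
                break
            except RateLimited as exc:
                if attempt == max_retries:
                    raise
                # Exponential backoff: wait longer after each failed attempt.
                sleep(exc.retry_after * (2 ** attempt))
        items.extend(page)
        if next_cursor is None:
            return items
        cursor = next_cursor


def make_fake_api():
    """In-memory stand-in for a paginated API that rate-limits page 2 once."""
    pages = {None: (["a", "b"], "p2"), "p2": (["c"], None)}
    state = {"limited_once": False}

    def fetch_page(cursor):
        if cursor == "p2" and not state["limited_once"]:
            state["limited_once"] = True
            raise RateLimited(retry_after=0.5)
        return pages[cursor]

    return fetch_page


if __name__ == "__main__":
    print(fetch_all(make_fake_api(), sleep=lambda seconds: None))
```

Passing `sleep` as a parameter keeps the function easy to test. Real code would leave the default `time.sleep` in place and read the wait time from the API's own response.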

Method 3: Using Browser Extensions

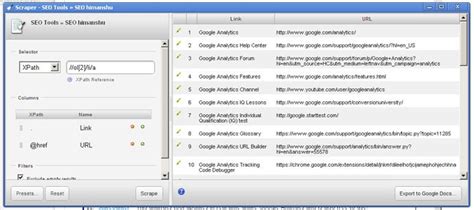

Browser extensions are another way to scrape data, especially for small-scale data extraction projects. These extensions can be installed in web browsers, such as Google Chrome or Mozilla Firefox, and can be used to extract data from websites. Some popular browser extensions for data scraping include:

* Scraper: A Chrome extension for extracting data from web pages.
* Web Scraper: A browser extension, available for Chrome and Firefox, for extracting data from web pages.
* Data Miner: A Chrome extension for extracting data from web pages and converting it into a CSV file.

Browser extensions can be a convenient way to scrape data, as they are easy to install and use. However, they may not be as powerful as web scraping software or APIs, and may have limitations in terms of the amount of data that can be extracted.

Method 4: Using Online Data Scraping Tools

Online data scraping tools are web-based platforms that provide data scraping services. These tools can be used to extract data from websites, without the need to install any software or write any code. Some popular online data scraping tools include:

* ParseHub: A web-based platform for extracting data from websites.
* Import.io: A web-based platform for extracting data from websites and converting it into a CSV file.
* Scrapinghub (now Zyte): A web-based platform for extracting data from websites and providing data scraping services.

Online data scraping tools can be a convenient way to scrape data, as they are easy to use and do not require any technical expertise. However, they may have limitations in terms of the amount of data that can be extracted, and may require payment for large-scale data extraction projects.
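Several of the tools above export their results as CSV. For readers who later move to code, this is a minimal sketch of that export step using Python's standard `csv` module. The `rows_to_csv` helper and the sample rows are invented for illustration and are not taken from any of these products.

```python
import csv
import io


def rows_to_csv(rows, fieldnames):
    """Serialize a list of dicts to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Hypothetical scraped records; note the comma and quotes in the second name.
SCRAPED_ROWS = [
    {"name": "Blue Widget", "price": "19.99"},
    {"name": 'Widget, "deluxe"', "price": "42.50"},
]

if __name__ == "__main__":
    print(rows_to_csv(SCRAPED_ROWS, ["name", "price"]))
```

Using the `csv` module instead of joining strings by hand matters because scraped text often contains commas, quotes, or line breaks, which the writer escapes automatically.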

Method 5: Using Machine Learning Algorithms

Machine learning can be used to scrape data, especially for complex data extraction projects. Models can be trained to navigate websites, extract data, and store it in a structured format. The main families of machine learning techniques used for data scraping are:

* Supervised learning: For training models on labeled examples to extract specific data from websites.
* Unsupervised learning: For finding patterns and relationships in data without labeled examples.
* Deep learning: For extracting complex data from websites, such as images and videos.

Machine learning algorithms can be a powerful way to scrape data, as they can handle complex data extraction tasks and provide high-quality data. However, they require technical expertise and large amounts of training data, which can be a challenge for small-scale data extraction projects.
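As a toy illustration of the supervised case, the sketch below trains a perceptron to decide whether a scraped text snippet looks like a price. It is a deliberately tiny example, not a production approach: the two hand-written features, the currency markers, and the seven training snippets are all assumptions chosen to keep the code short.

```python
CURRENCY_MARKERS = ("$", "USD", "EUR")  # illustrative, not exhaustive


def features(text):
    """Turn a text snippet into a small numeric feature vector."""
    digits = sum(ch.isdigit() for ch in text)
    return [
        1.0,  # bias term
        1.0 if any(marker in text for marker in CURRENCY_MARKERS) else 0.0,
        digits / max(len(text), 1),  # share of characters that are digits
    ]


def predict(weights, text):
    """Return 1 if the snippet is classified as a price, else 0."""
    score = sum(w * x for w, x in zip(weights, features(text)))
    return 1 if score > 0 else 0


def train(examples, epochs=10):
    """Classic perceptron rule: nudge the weights after each mistake."""
    weights = [0.0, 0.0, 0.0]
    for _ in range(epochs):
        for text, label in examples:
            error = label - predict(weights, text)
            if error:
                weights = [w + error * x for w, x in zip(weights, features(text))]
    return weights


# Hypothetical labeled snippets: 1 = price, 0 = anything else.
TRAINING = [
    ("$19.99", 1),
    ("EUR 5.00", 1),
    ("USD 120", 1),
    ("Add to cart", 0),
    ("Free shipping on orders", 0),
    ("Model 2024 review", 0),
    ("In stock", 0),
]

weights = train(TRAINING)

if __name__ == "__main__":
    for snippet in ["$42.50", "Contact us"]:
        print(snippet, "->", predict(weights, snippet))
```

With so few examples the model mostly learns to rely on the currency marker. Real projects need far more labeled data and richer features, which is where the cost noted above comes from.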

💡 Note: Data scraping should be done in accordance with the terms of service of the website being scraped, and should respect the privacy and intellectual property rights of the website owner.
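One practical courtesy that can be automated is checking a site's robots.txt before fetching a page. A robots.txt file is not the same thing as a site's terms of service, so this check complements reading them and does not replace it. The sketch below uses Python's standard `urllib.robotparser`. The robots.txt content, the `my-scraper` user agent, and the example.com URLs are made up, and the rules are parsed from a string so that nothing is downloaded.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, inlined so the example needs no network.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def build_policy(robots_txt):
    """Parse robots.txt text into a RobotFileParser."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


policy = build_policy(ROBOTS_TXT)

if __name__ == "__main__":
    for url in ("https://example.com/products/1",
                "https://example.com/private/report"):
        print(url, policy.can_fetch("my-scraper", url))
    print("crawl delay:", policy.crawl_delay("my-scraper"))
```

In a real scraper you would typically call `set_url()` and `read()` on the parser to load the live file, and then pause between requests for at least the advertised crawl delay.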

In summary, there are several ways to scrape data, including using web scraping software, APIs, browser extensions, online data scraping tools, and machine learning algorithms. Each method has its own advantages and disadvantages, and the choice of method depends on the specific data extraction project and the technical expertise of the user.

To further illustrate the differences between these methods, the following table provides a comparison of the five methods:

| Method | Advantages | Disadvantages |
|---|---|---|
| Web Scraping Software | Flexible, powerful, and scalable | Requires technical expertise, can be time-consuming to set up |
| APIs | Efficient, reliable, and structured data | Rate limits, usage restrictions, and often requires an API key |
| Browser Extensions | Convenient, easy to install and use | Limited functionality and data volume, less powerful than web scraping software |
| Online Data Scraping Tools | Convenient, easy to use, no coding required | May limit how much data can be extracted, requires payment for large-scale projects |
| Machine Learning Algorithms | Powerful, flexible, and scalable | Requires technical expertise, large amounts of training data, and can be time-consuming to set up |

The main factors to consider when choosing a data scraping method are the complexity of the project, the amount of data to be extracted, the technical expertise of the user, and the desired outcome. Weighing the advantages and disadvantages of each method against these factors helps users choose the approach that best fits their data scraping needs.

What is data scraping?

Data scraping is the process of automatically extracting data from websites, web pages, and online documents.

What are the benefits of data scraping?

The benefits of data scraping include the ability to collect and analyze large amounts of data, improve business decision-making, and gain insights into customer behavior and market trends.

What are the challenges of data scraping?

The challenges of data scraping include handling anti-scraping measures, dealing with complex website structures, and ensuring the quality and accuracy of the extracted data.