5 Ways to Remove Duplicates

Introduction to Removing Duplicates

Removing duplicates from a dataset, list, or any collection of items is a crucial step in data preprocessing for various applications, including data analysis, scientific research, and business intelligence. Duplicates can skew results, lead to incorrect conclusions, and unnecessarily increase the size of datasets, making them harder to manage and analyze. This post explores five effective ways to remove duplicates, focusing on methods applicable across different contexts, including spreadsheet software like Microsoft Excel, programming languages such as Python, and database management systems.

Understanding Duplicates

Before diving into the methods for removing duplicates, it’s essential to understand what constitutes a duplicate. A duplicate is an exact copy of an item already present in a dataset. The definition of “exact” can vary depending on the context; for example, in a list of names, duplicates might be considered case-insensitive (e.g., “John” and “john” are the same), while in other scenarios, case sensitivity might be crucial.
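
To make the case-sensitivity point concrete, here is a minimal Python sketch of case-insensitive duplicate removal. The function name `dedupe_case_insensitive` and the normalization rule (trim whitespace, then `casefold()`) are illustrative assumptions, not a standard; a real dataset may need a different rule.

```python
def dedupe_case_insensitive(names):
    """Drop case-insensitive duplicates, keeping the first spelling seen."""
    seen = set()
    unique = []
    for name in names:
        # Assumed normalization: ignore surrounding whitespace and letter case
        key = name.strip().casefold()
        if key not in seen:
            seen.add(key)
            unique.append(name)
    return unique


names = ["John", "john", "Mary", "JOHN ", "mary", "Ann"]
print(dedupe_case_insensitive(names))  # ['John', 'Mary', 'Ann']
```

If case matters in your data, comparing the raw strings instead of the normalized key would treat "John" and "john" as distinct items.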

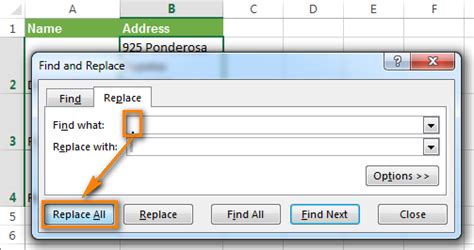

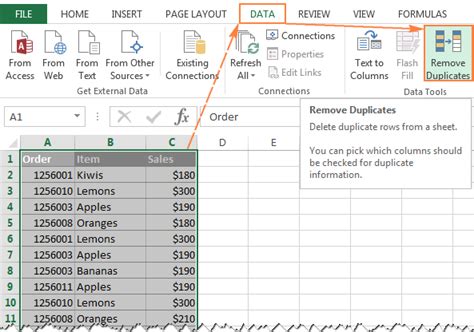

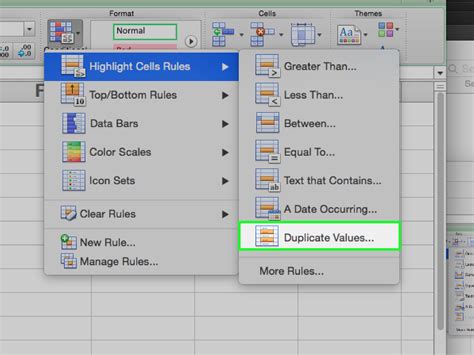

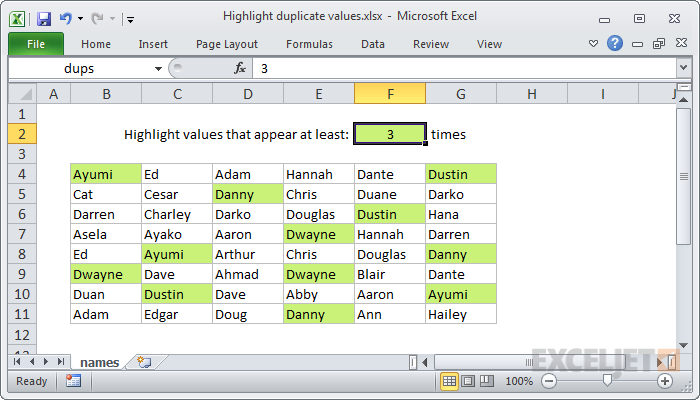

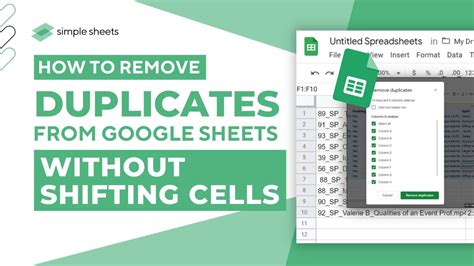

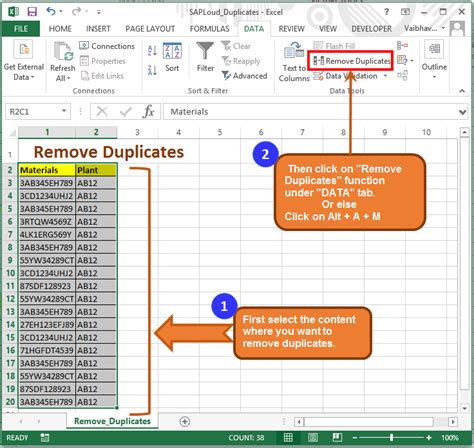

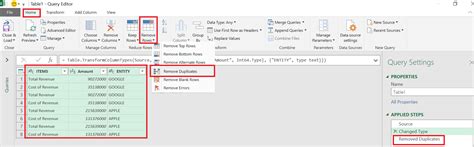

Method 1: Using Spreadsheet Software

Spreadsheet software like Microsoft Excel and Google Sheets provides built-in features to remove duplicates. This method is straightforward and user-friendly, especially for those without extensive programming knowledge. In Excel:

- Select the range of cells you want to remove duplicates from.
- Go to the “Data” tab in the ribbon.
- Click “Remove Duplicates”.
- Choose the columns you want to consider for duplicate removal.
- Click “OK”.

In Google Sheets, the equivalent command is under Data > Data cleanup > Remove duplicates.

📝 Note: This method modifies the original data. It's advisable to create a backup or work on a copy to avoid losing any information.

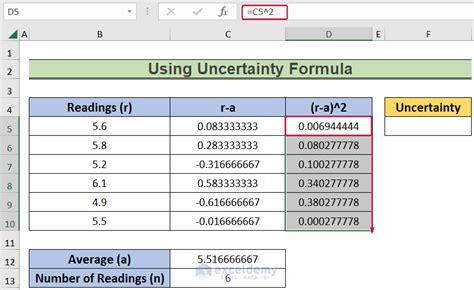

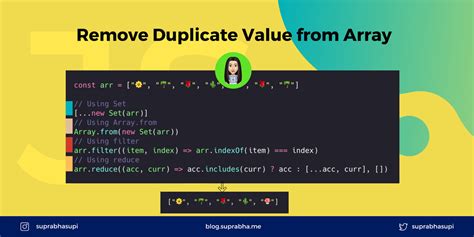

Method 2: Programming Languages - Python

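Python, with its extensive libraries, offers efficient ways to remove duplicates from lists and datasets. The most common method involves converting a list to a set, which automatically removes duplicates because sets in Python cannot contain duplicate values. Keep in mind that a set is unordered, so the original order of the list is not guaranteed to survive, and every element must be hashable.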

```python
my_list = [1, 2, 2, 3, 4, 4, 5, 6, 6]

# Converting to a set drops repeated values
my_set = set(my_list)

print(my_set)
```

For more complex datasets, such as those stored in pandas DataFrames, you can use the drop_duplicates method.

```python
import pandas as pd

# Sample DataFrame
data = {'Name': ['Tom', 'Nick', 'John', 'Tom'],
        'Age': [20, 21, 19, 20]}
df = pd.DataFrame(data)

# Remove duplicates based on 'Name', keeping the first occurrence
df_unique = df.drop_duplicates(subset='Name', keep='first')
print(df_unique)
```

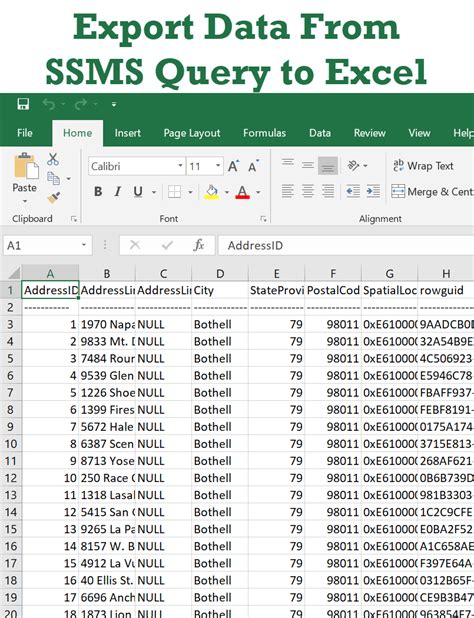

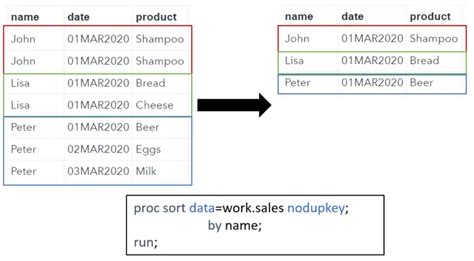

Method 3: Database Management Systems - SQL

In database management systems, removing duplicates can be achieved using SQL queries. The DISTINCT keyword is used to select only unique (i.e., distinct) records.

```sql
SELECT DISTINCT column1, column2
FROM tablename;
```

For scenarios where you need to remove duplicates based on certain conditions or when you want to delete duplicate rows from a table, more complex queries involving subqueries or joins might be necessary.
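
As a rough sketch of deleting duplicate rows with a subquery, the example below uses Python's built-in `sqlite3` module and an in-memory database. The `people` table and its columns are hypothetical, and the `DELETE` statement is written for SQLite; other database systems may need different syntax for the same idea.

```python
import sqlite3

# Hypothetical table used only for illustration
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE people (id INTEGER PRIMARY KEY, name TEXT, age INTEGER)"
)
conn.executemany(
    "INSERT INTO people (name, age) VALUES (?, ?)",
    [("Tom", 20), ("Nick", 21), ("John", 19), ("Tom", 20)],
)

# Keep the row with the smallest id in each (name, age) group
# and delete every other row in that group.
conn.execute(
    """
    DELETE FROM people
    WHERE id NOT IN (
        SELECT MIN(id) FROM people GROUP BY name, age
    )
    """
)
conn.commit()

remaining = conn.execute("SELECT name, age FROM people ORDER BY id").fetchall()
print(remaining)  # [('Tom', 20), ('Nick', 21), ('John', 19)]
```

Unlike `SELECT DISTINCT`, which only filters the query result, this changes the stored table, so it is worth running against a copy or inside a transaction first.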

Method 4: Manual Removal

In small datasets or specific situations where automation is not feasible, manual removal of duplicates might be the most straightforward approach. This involves manually reviewing each item in the dataset and deleting or marking duplicates. While time-consuming and prone to human error, this method can be useful for ensuring accuracy in critical applications or when working with datasets that require a high level of personal judgment.

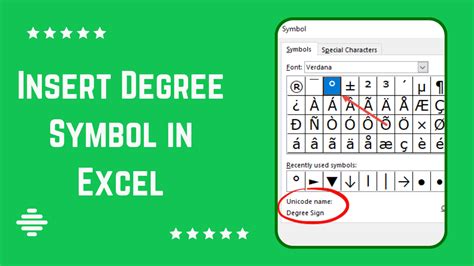

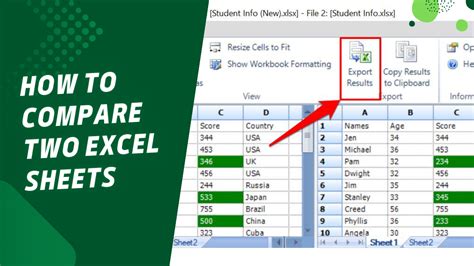

Method 5: Using Third-Party Tools and Add-ons

Several third-party tools and add-ons are available for removing duplicates from specific types of data, such as email lists, contact information, or text files. These tools often provide more advanced features than built-in software functions, such as fuzzy matching for finding duplicates based on similarity rather than exact matches. Examples include add-ins for Microsoft Excel and standalone applications designed for data cleansing.

| Method | Description | Applicability |
|---|---|---|
| Spreadsheet Software | Using built-in features like "Remove Duplicates" in Excel or Google Sheets. | General datasets, especially those already in spreadsheet format. |
| Programming Languages | Utilizing libraries and functions in languages like Python for duplicate removal. | Large datasets, complex data manipulation, and automation tasks. |
| Database Management Systems | Employing SQL queries with keywords like DISTINCT for unique record selection. | Structured data stored in databases, requiring efficient query-based operations. |
| Manual Removal | Manually reviewing and deleting duplicates, often in small or critical datasets. | Small datasets or situations requiring human judgment and precision. |
| Third-Party Tools | Using specialized software or add-ons for advanced duplicate removal features. | Specific data types or scenarios needing fuzzy matching, advanced filtering, etc. |

In summary, removing duplicates is a critical process in data management that can be accomplished through various methods, each suited to different contexts and types of data. Whether using spreadsheet software, programming languages, database queries, manual removal, or third-party tools, the key is selecting the method that best fits the specific requirements of your dataset and application.

What is the most efficient way to remove duplicates from a large dataset?

The most efficient way often involves using programming languages like Python, especially when combined with powerful libraries such as pandas for data manipulation.

Can I remove duplicates from a dataset while preserving the original order of items?

Yes, methods like using Python’s dict.fromkeys() function (for Python 3.7 and later) can remove duplicates while preserving the original order, as dictionaries in Python maintain the insertion order.
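
A short sketch of that approach, using a made-up sample list:

```python
items = ["b", "a", "b", "c", "a"]

# dict keys are unique and keep insertion order (Python 3.7+),
# so the first occurrence of each item survives in its original position.
unique_in_order = list(dict.fromkeys(items))

print(unique_in_order)  # ['b', 'a', 'c']
```

As with sets, the items must be hashable for this to work.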

How do I handle duplicates in a dataset where two records are similar but not exactly the same?

This scenario often requires using fuzzy matching techniques, which can be implemented through specific algorithms or libraries available in programming languages. These methods allow for the identification and potential removal of records that are similar but not identical.
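
One way to sketch fuzzy matching with only the Python standard library is `difflib.SequenceMatcher`. The helper names and the 0.85 similarity threshold below are assumptions chosen for illustration; a suitable threshold depends on the data and should be tuned and reviewed by hand.

```python
from difflib import SequenceMatcher


def is_similar(a, b, threshold=0.85):
    """Return True if two strings are at least `threshold` similar, ignoring case."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio() >= threshold


def dedupe_fuzzy(records, threshold=0.85):
    """Keep a record only if it is not similar to any record already kept."""
    kept = []
    for record in records:
        if not any(is_similar(record, k, threshold) for k in kept):
            kept.append(record)
    return kept


records = ["Jon Smith", "John Smith", "Jane Doe", "john smith", "Alice Wong"]
print(dedupe_fuzzy(records))  # ['Jon Smith', 'Jane Doe', 'Alice Wong']
```

This compares every record against every kept record, so it suits small lists; larger datasets usually call for a dedicated library or a blocking strategy to limit the number of comparisons.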